When should institutions begin to execute preliminary CECL calculations?

Nov 8, 2016

When is your financial institution planning to execute preliminary calculations for the FASB’s Current Expected Credit Loss (CECL) model? For about 1 in every 3 bank and credit union professionals recently surveyed by Abrigo, the answer is sooner rather than later.

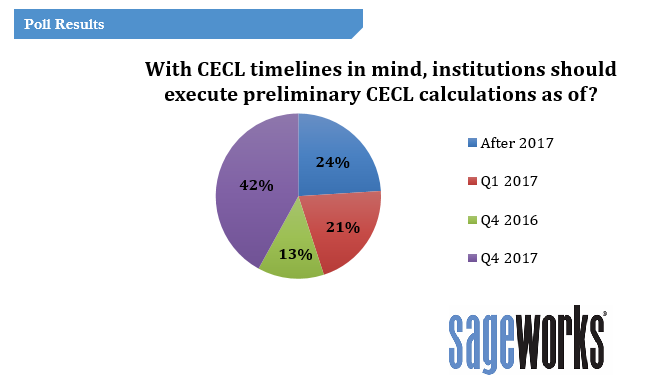

Of 299 individuals attending a recent webinar, “CECL – Initial & Subsequent Measures of Loss,” 13 percent said their institutions should start these preliminary calculations in the current quarter (Q4 2016), and 21 percent said they should begin in Q1 2017.

The largest share of poll respondents (42 percent) said that, based on what they know about CECL, their institutions should execute preliminary calculations under the new model during Q4 2017. Another 24 percent said institutions can wait even longer, until after 2017.

During the webinar, Abrigo Senior Risk Management Consultant Neekis Hammond outlined various methodologies that financial institutions will be able to use for their expected loss calculations and described the importance of data in implementing the new standard for measuring credit losses. FASB’s issuance of the CECL model in June 2016 marked the most significant change in accounting guidance in decades. Institutions are still digesting the implications of the new standard’s requirements, and many are making strides toward preparing their data for the CECL methodology and for parallel allowance for loan and lease losses (ALLL) calculations.

CECL data considerations

For nearly all of the methodologies, Hammond noted, historical data will be critical to developing a full analysis of loss experience in time for the earliest implementation deadline, which is 2020 for SEC-filing institutions. In general, the more data an institution has, the better, he said, so the more institutions that execute preliminary calculations in 2017, the better.

“Even though we have historical loan data saved for future calculations, we may be missing attributes that may be brought to light when executing first calculations,” he said. “I hope those institutions that responded with ‘after 2017’ at least entertain attempting these calculations internally to explore the data limitations that they experience when running the numbers.”

Hammond also noted that when determining how far back to capture loan-level data, institutions should consider the comparability of the data. “Once you go back far in the portfolio, you’ve got to look at how many risk rating scales have happened, how many portfolio shifts have happened, what have the underwriting standards been, and then ask – is that data still relevant to the portfolio?”

Consequences of delays

The FASB standard doesn’t dictate when financial institutions should begin testing calculations under the new model, but delaying action could have some unintended consequences, such as:

- Limiting methodology options. “We may be stuck doing a discounted cash flow, which can be very difficult for some of our smaller institutions,” Hammond said. “And some of those smaller institutions are the ones who want to wait the longest, so they’re the least prepared for [using] DCF.”

- Creating additional expenses. Hammond said institutions can encounter additional human capital and third-party costs if data gaps are identified late in the process and assumptions have to be modeled as a result.

- Inhibiting the ability to backtest. Backtesting the model’s accuracy before initial implementation is important, and executing test calculations early leaves plenty of time to do so ahead of implementation.

- Introducing increased volatility. Fewer observations introduce more volatility into the calculation, Hammond said. “Having a buildup of historical experience is key to reducing volatility post implementation.”
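To illustrate why a thin loss history makes the calculation more volatile, here is a minimal sketch that treats an observed historical loss rate as a simple binomial proportion, where each loan either defaults or does not. That independence assumption is a deliberate simplification, not how any particular CECL methodology works, and the function name `loss_rate_std_error` and the 1 percent loss rate are hypothetical values chosen for illustration.

```python
import math


def loss_rate_std_error(loss_rate: float, observations: int) -> float:
    """Binomial standard error of an observed loss rate.

    Simplifying assumption (hypothetical): every observation is an
    independent loan that either defaults (1) or does not (0).
    """
    if observations <= 0:
        raise ValueError("observations must be positive")
    return math.sqrt(loss_rate * (1.0 - loss_rate) / observations)


# Hypothetical portfolio with a true loss rate of 1 percent.
TRUE_RATE = 0.01

for n in (100, 1_000, 10_000):
    se = loss_rate_std_error(TRUE_RATE, n)
    # A smaller standard error means the estimated rate swings less
    # from one measurement period to the next.
    print(f"{n:>6} observations: standard error = {se:.4%}")
```

Under these assumptions, each tenfold increase in observations shrinks the standard error by a factor of the square root of 10 (about 3.16), which is one way to see why a longer buildup of historical experience tends to steady the estimate.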

The quality of an institution’s data will determine which methodologies are available to it for executing CECL calculations. The upcoming webinar, “Data Quality Considerations for CECL Measurement,” on Monday, Nov. 14, at 2 p.m. ET will take a deeper dive into the data topic. Abrigo Director of Special Research Garver Moore will provide an overview of common data problems institutions face during the transition, and he will outline immediate steps institutions can take to ensure that the information needed for CECL calculations is accessible and sound in time for implementation.

Abrigo, a financial information company, provides lending, credit risk and portfolio risk solutions to banks and credit unions.